The era of intelligent automation has arrived, and power users are no longer satisfied with generic chatbot templates. They want full control, deep integrations, and agents that can reason, act, and adapt within their ecosystem.

This guide outlines how to design and deploy your own agent infrastructure using LLMs, workflow orchestration, toolchains, memory layers, and a control plane, optionally built around standards such as the Model Context Protocol (MCP). If you are ready to move beyond basic prompting and into system-level architecture, this guide provides the blueprint.

Why Build Your Own Agent Infrastructure?

Pre-built AI tools are useful for simple tasks, but they often present limitations such as:

- Limited integration with external services
- Rigid workflows
- Shallow logical reasoning
- Limited scalability
- Lack of monitoring and governance

Power users and advanced teams require more flexibility. Building your own agent infrastructure enables:

- Full modular customization
- Advanced logical control
- Secure and governed environments
- Deep integration with internal tools
- Higher performance and reliability

This is not about building a basic chatbot. It is about architecting a system that can think and act intelligently.

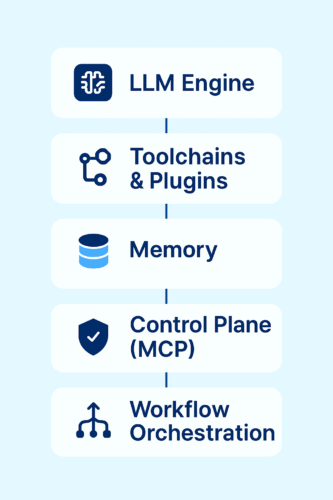

Core Components of a Modern Agent Architecture

A structured, scalable agent environment rests on five components, each with a distinct role. Together, they enable reasoning, memory, action, and control across the entire system.

1. LLM Engine (The Brains)

The LLM is the reasoning core of the agent. It interprets user inputs, understands context, and determines what actions should be taken.

You may choose:

- Cloud-hosted models such as GPT-4, Claude, or Gemini
- Self-hosted open-source models like Llama 3 or Mistral

Your selection depends on latency, cost, security requirements, and infrastructure capacity.
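Whichever model you pick, it helps to keep the rest of the system independent of the provider and to have the model state its chosen action in a structured form. The sketch below assumes the model is prompted to reply with a JSON object naming an action. `LLMBackend`, `FakeBackend`, `decide`, and the `{"action": ..., "args": ...}` format are hypothetical choices for illustration. They are not any vendor's API.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Protocol


class LLMBackend(Protocol):
    """Any model client (cloud or self-hosted) that maps a prompt to text."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class Decision:
    action: str
    args: dict[str, Any] = field(default_factory=dict)


class FakeBackend:
    """Hypothetical stand-in for a real model; always asks for the weather tool."""

    def complete(self, prompt: str) -> str:
        return '{"action": "get_weather", "args": {"city": "Cairo"}}'


def decide(llm: LLMBackend, user_input: str) -> Decision:
    """Ask the model which action to take and validate its JSON reply."""
    prompt = (
        "Reply only with JSON of the form "
        '{"action": <tool name or "answer">, "args": {...}}.\n'
        f"User: {user_input}"
    )
    raw = llm.complete(prompt)
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        # Treat unparseable output as a plain answer instead of crashing.
        return Decision(action="answer", args={"text": raw})
    if not isinstance(data, dict) or not isinstance(data.get("action"), str):
        return Decision(action="answer", args={"text": raw})
    args = data.get("args") or {}
    return Decision(action=data["action"], args=args if isinstance(args, dict) else {})


if __name__ == "__main__":
    print(decide(FakeBackend(), "What's the weather in Cairo?"))
```

To use a real client (OpenAI, Anthropic, or a local Ollama server), you only need an object with a `complete` method. The validation and fallback logic stays the same.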

2. Toolchains and Plugins (The Arms and Sensors)

Agents must be able to execute actions, not only generate text.

Using orchestration tools like Flowise, you can build toolchains that give your agent access to:

- Databases
- APIs
- Spreadsheets
- File systems
- Document processing
- Search tools

Each node in Flowise represents a tool, condition, or action.
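In code, a toolchain often comes down to a registry that maps each tool name to a callable and to a description the LLM can read. The following is a minimal sketch that does not depend on Flowise. The `tool` decorator and the `lookup_order` and `word_count` tools are hypothetical examples, and the order data is a placeholder for a real database or CRM.

```python
from typing import Any, Callable

# Registry of tool name -> (description shown to the LLM, implementation).
TOOLS: dict[str, tuple[str, Callable[..., Any]]] = {}


def tool(name: str, description: str):
    """Register a function as a tool the agent may call."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = (description, fn)
        return fn
    return register


@tool("lookup_order", "Return the status of an order by its ID.")
def lookup_order(order_id: str) -> str:
    # Hypothetical stand-in for a database or CRM query.
    fake_db = {"A100": "shipped", "A101": "processing"}
    return fake_db.get(order_id, "not found")


@tool("word_count", "Count the words in a document's text.")
def word_count(text: str) -> int:
    return len(text.split())


def describe_tools() -> str:
    """Text block listing available tools, suitable for inclusion in a prompt."""
    return "\n".join(f"- {name}: {desc}" for name, (desc, _) in TOOLS.items())


def call_tool(name: str, args: dict[str, Any]) -> Any:
    """Dispatch a tool call requested by the LLM, rejecting unknown tools."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    _, fn = TOOLS[name]
    return fn(**args)


if __name__ == "__main__":
    print(describe_tools())
    print(call_tool("lookup_order", {"order_id": "A100"}))
```

Rejecting unknown tool names keeps a confused or manipulated model from invoking anything outside the registry.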

3. Memory Layer (Context Awareness)

A truly intelligent agent requires memory.

Vector stores such as Pinecone, ChromaDB, Weaviate, and Qdrant allow your system to store and retrieve contextual information. This enables:

- Multi-turn conversations
- Retrieval-augmented generation
- Personalized responses
- Long-term context retention

Without memory, an agent treats every request in isolation. It cannot carry context across turns, personalize its responses, or follow multi-step workflows.
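Every vector store follows the same basic loop: embed text, store the vector, and return the nearest neighbors of a query. The toy sketch below shows that loop in pure Python. The hashed bag-of-words `embed` function is a deliberate simplification that stands in for a real embedding model. `VectorMemory` and `build_prompt` are hypothetical and do not reflect the API of Pinecone, ChromaDB, Weaviate, or Qdrant.

```python
import math
import re
import zlib
from collections import Counter

DIM = 512  # Size of the toy embedding space.


def embed(text: str) -> list[float]:
    """Toy embedding: hashed bag of words, L2-normalized (not a real model)."""
    vec = [0.0] * DIM
    for word, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        vec[zlib.crc32(word.encode()) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class VectorMemory:
    """In-memory store of (text, vector) pairs with cosine-similarity search."""

    def __init__(self) -> None:
        self.items: list[tuple[str, list[float]]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        # Vectors are normalized, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, v)), t) for t, v in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [t for _, t in scored[:k]]


def build_prompt(memory: VectorMemory, question: str) -> str:
    """Retrieval-augmented prompt: prepend the most relevant stored snippets."""
    context = "\n".join(f"- {s}" for s in memory.search(question))
    return f"Context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    mem = VectorMemory()
    mem.add("The user prefers replies in Arabic.")
    mem.add("Order A100 shipped on Monday.")
    mem.add("Refund requests are processed within 5 business days.")
    print(build_prompt(mem, "When will my refund be processed?"))
```

A hosted vector store replaces the linear scan with an approximate nearest-neighbor index and persists the vectors. The retrieve-then-prompt pattern stays the same.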

4. Control Plane (MCP or Custom)

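The control plane acts as the DevOps layer for your agent. You can build it yourself or assemble it from existing pieces. One option is an LLM gateway or proxy for keys, routing, and rate limits, combined with the Model Context Protocol (MCP), an open standard for connecting agents to tools and data sources. In either case, it handles: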

- Authentication
- API key management
- Model switching and fallback logic
- Rate limiting
- Usage logging
- Monitoring and error tracking
- Version control

This ensures the agent remains secure, scalable, and easy to manage.
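Several of these duties, including fallback, rate limiting, and usage logging, can be prototyped as a thin wrapper around model calls before you adopt a dedicated gateway. The sketch below assumes each model client is a plain function from prompt to text. `ModelRouter`, `RateLimitExceeded`, and the two demo models are hypothetical. A production setup would also add authentication and persistent logs.

```python
import logging
import time
from collections import deque
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("control-plane")

ModelFn = Callable[[str], str]


class RateLimitExceeded(RuntimeError):
    pass


class ModelRouter:
    """Tries models in priority order, enforcing a sliding-window rate limit."""

    def __init__(self, models: dict[str, ModelFn], max_calls: int, per_seconds: float):
        self.models = models  # Dicts keep insertion order: first entry = primary.
        self.max_calls = max_calls
        self.per_seconds = per_seconds
        self.calls: deque[float] = deque()
        self.usage: list[tuple[str, bool]] = []  # (model name, succeeded)

    def _check_rate_limit(self) -> None:
        now = time.monotonic()
        # Drop timestamps that have left the window.
        while self.calls and now - self.calls[0] > self.per_seconds:
            self.calls.popleft()
        if len(self.calls) >= self.max_calls:
            raise RateLimitExceeded("too many requests; retry later")
        self.calls.append(now)

    def complete(self, prompt: str) -> str:
        self._check_rate_limit()
        for name, model in self.models.items():
            try:
                result = model(prompt)
            except Exception as exc:  # Fall back to the next model on any error.
                log.warning("model %s failed: %s", name, exc)
                self.usage.append((name, False))
                continue
            log.info("model %s answered", name)
            self.usage.append((name, True))
            return result
        raise RuntimeError("all models failed")


def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary model timed out")  # Hypothetical outage.


def backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"


if __name__ == "__main__":
    router = ModelRouter({"primary": flaky_primary, "backup": backup}, max_calls=5, per_seconds=60)
    print(router.complete("Hello"))
    print(router.usage)
```

The `usage` list records which model served each request. This is the raw material for the usage logging and monitoring listed above.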

5. Workflow Orchestration (Logic Layer)

This layer defines how the agent behaves and makes decisions.

Tools like Flowise or n8n allow you to create visual workflows that include:

- Conditional branches
- Loops
- API calls
- Custom functions
- Output formatting
- Multi-step sequences

With proper orchestration, the LLM becomes part of a functional system rather than just a text generator.
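Visual builders such as Flowise and n8n manage this graph for you, but the underlying idea fits in a few lines. Each node reads and updates a shared state and names the next node. Transitions can branch, point back to an earlier node to form a loop, or chain into multi-step sequences. The node names and the `run_workflow` helper below are hypothetical and do not mirror either tool's internals.

```python
from typing import Any, Callable, Optional

State = dict[str, Any]
# A node updates the state and returns the name of the next node (None = stop).
Node = Callable[[State], Optional[str]]


def classify(state: State) -> Optional[str]:
    # Conditional branch: route refund questions to a dedicated node.
    return "refund" if "refund" in state["input"].lower() else "answer"


def refund(state: State) -> Optional[str]:
    state["draft"] = "Refunds take 5 business days."  # Hypothetical API result.
    return "format"


def answer(state: State) -> Optional[str]:
    state["draft"] = f"You asked: {state['input']}"
    return "format"


def format_output(state: State) -> Optional[str]:
    state["output"] = f"[agent] {state['draft']}"
    return None


NODES: dict[str, Node] = {
    "classify": classify, "refund": refund, "answer": answer, "format": format_output,
}


def run_workflow(start: str, state: State, max_steps: int = 20) -> State:
    """Follow node transitions; max_steps guards against runaway loops."""
    current: Optional[str] = start
    steps = 0
    while current is not None:
        if steps >= max_steps:
            raise RuntimeError("workflow exceeded max_steps")
        state.setdefault("trace", []).append(current)
        current = NODES[current](state)
        steps += 1
    return state


if __name__ == "__main__":
    result = run_workflow("classify", {"input": "Where is my refund?"})
    print(result["trace"], result["output"])
```

The `trace` list records the path the agent took through the graph. Visual builders expose the same kind of information in their run history.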

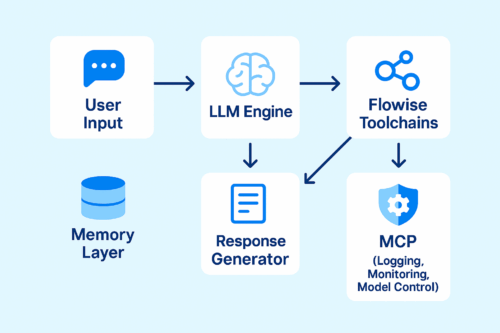

Visualizing the Architecture

To better understand how the different components work together, the diagram below illustrates the complete architecture of a modern agent system. It shows the flow from user input, through the LLM and toolchain layers, down to orchestration and control.

This architecture is fully modular, meaning each layer can be swapped, upgraded, or scaled independently. You can replace the LLM, extend toolchains, add new APIs, or enhance the memory layer without rewriting the entire system.

Getting Started: Build Your First Agent in Under One Hour

A simple but functional setup can be achieved quickly by following these steps:

- Choose your LLM backend (OpenAI, Claude, or local Ollama).
- Install Flowise locally.
- Build your first workflow: Input → LLM → Weather API → Output Formatter.
- Add ChromaDB to store conversation memory.
- Put a proxy layer in front of the model to manage authentication, logging, and model routing, and optionally use MCP to connect the agent to external tools.

Within an hour, you will have a fully operational starter agent.
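Before wiring it up in Flowise, you can sketch the starter pipeline (Input → LLM → Weather API → Output Formatter) in plain Python to see what each node does. The sketch assumes stubbed components. `fake_llm_extract_city` stands in for the LLM call, `fake_weather_api` returns canned placeholder data in place of a real weather service, and a plain list stands in for ChromaDB. All three are hypothetical and should be replaced with real clients once the flow works.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    # Stand-in for ChromaDB: a simple list of past turns.
    history: list[str] = field(default_factory=list)


def fake_llm_extract_city(user_input: str) -> str:
    """Stub for the LLM step: pull the city name out of the request."""
    # A real LLM would handle free-form phrasing; this only checks a fixed list.
    for city in ("Cairo", "London", "Tokyo"):
        if city.lower() in user_input.lower():
            return city
    return "Cairo"  # Hypothetical default city.


def fake_weather_api(city: str) -> dict:
    """Stub for the Weather API step with canned placeholder data."""
    data = {"Cairo": (31, "sunny"), "London": (14, "rainy"), "Tokyo": (22, "cloudy")}
    temp_c, sky = data[city]
    return {"city": city, "temp_c": temp_c, "sky": sky}


def format_output(weather: dict) -> str:
    """Output Formatter step: turn structured data into a reply."""
    return f"It is {weather['temp_c']}°C and {weather['sky']} in {weather['city']}."


def handle(user_input: str, memory: Conversation) -> str:
    """Input -> LLM -> Weather API -> Output Formatter, recording each turn."""
    city = fake_llm_extract_city(user_input)
    reply = format_output(fake_weather_api(city))
    memory.history.extend([f"user: {user_input}", f"agent: {reply}"])
    return reply


if __name__ == "__main__":
    mem = Conversation()
    print(handle("What's the weather in London?", mem))
    # prints: It is 14°C and rainy in London.
```

Each function maps to one Flowise node, so you can swap the stubs for real nodes one at a time.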

Real-World Use Cases

The diagram below highlights four of the most common and powerful real-world use cases enabled by agent infrastructure. Each represents a functional area where intelligent agents significantly improve efficiency and performance.

A well-architected agent infrastructure can power multiple applications, from customer support automation to internal knowledge search and research assistance. These use cases demonstrate how agents combine reasoning, memory, and tool access to produce meaningful results in practical workflows.

Customer Support Agent

Connects to your CRM, answers inquiries, and escalates complex issues.

Sales Agent

Retrieves product data, pricing, and inventory, and handles common objections.

Internal Knowledge Agent

Searches company documents, SOPs, and knowledge bases.

Research Copilot

Reads PDFs, performs academic searches, and summarizes findings.

Final Thoughts

Human-like AI isn’t just about answers; it’s about tone, timing, and the feeling of talking to someone who understands.

With Appgain, you’re not just building a bot.

You’re shaping a digital persona that sells, supports, and strengthens the way your brand communicates.

Want help designing an AI persona that feels natural and converts better?

Let’s talk.

🌐 Website: https://appgain.io

📧 Email:

📞 Phone: +20 111 998 5594

Reach out, and we’ll help you build and deploy your first high-performing AI persona in minutes.